A DAG — Directed Acyclic Graph — is the secret sauce of data orchestration, the invisible scaffolding behind your pipelines, workflows, and machine learning jobs. And if you hang around data engineers long enough, you’ll hear them talk about DAGs the way guitar nerds talk about vintage amps — reverently, obsessively, and occasionally with swearing.

A DAG is basically a flowchart with commitment issues. It connects tasks in a specific order — each task pointing to the next — but never loops back on itself. (That’s the acyclic part. If it loops, congratulations, you’ve built a time machine or an infinite while loop. Either way, someone’s pager is going off at 3 a.m.)
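To make the "acyclic" part concrete, here is a minimal sketch of cycle detection using `graphlib` from the Python standard library (3.9+). The `find_cycle` helper and the task names are hypothetical, made up for illustration; real orchestrators run their own version of this check when they load a graph.

```python
from graphlib import CycleError, TopologicalSorter


def find_cycle(dependencies):
    """Return the offending cycle as a list of task names, or None if acyclic.

    `dependencies` maps each task to the set of tasks it depends on.
    """
    try:
        # prepare() raises CycleError if the graph contains a cycle.
        TopologicalSorter(dependencies).prepare()
    except CycleError as err:
        # The second exception argument is the cycle; its first and last
        # entries are the same node.
        return err.args[1]
    return None


# A well-behaved pipeline: extract -> transform -> load.
valid = {"transform": {"extract"}, "load": {"transform"}}

# The "time machine": extract now waits on load, which closes the loop.
broken = {"transform": {"extract"}, "load": {"transform"}, "extract": {"load"}}

print(find_cycle(valid))   # None
print(find_cycle(broken))  # a list of task names that starts and ends on the same task
```

The point of checking before running is that a cycle is a definition error, not a runtime one: no task in the loop could ever start.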

A DAG Creates Order in a Sea of Chaos

In a world where every tool wants to be “event-driven” or “serverless,” DAGs are refreshingly concrete. They say, “Do this, then that, but only after those two other things are done.” It’s structure. It’s logic. It’s your data engineer finally getting to sleep because Airflow stopped running tasks out of order.

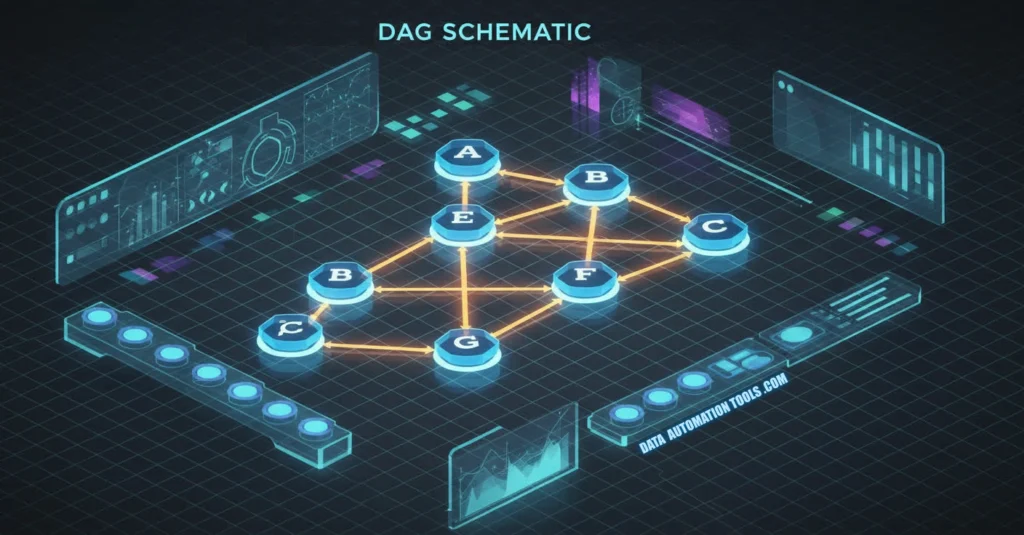

Every DAG is made up of nodes (tasks) and edges (dependencies). You might have a simple one:

Extract → Transform → Load

Or something that looks like a plate of linguine: dozens of parallel branches converging into a final aggregation step. The point is, DAGs give you control over sequencing, dependencies, retries, and scheduling.
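Nodes and edges translate almost directly into a dictionary, and the execution order falls out of a topological sort. The sketch below uses the standard library's `graphlib` (Python 3.9+); the `execution_batches` helper and the task names are assumptions for illustration, not any orchestrator's API. Tasks in the same batch have no dependencies on each other, so a scheduler could run them in parallel.

```python
from graphlib import TopologicalSorter


def execution_batches(dependencies):
    """Group tasks into batches; each batch only depends on earlier batches."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        # get_ready() returns every task whose dependencies are all done.
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        # Mark the whole batch as finished so the next layer unlocks.
        sorter.done(*ready)
    return batches


# Two parallel branches converging into one aggregation step.
pipeline = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform_orders": {"extract_orders"},
    "transform_users": {"extract_users"},
    "aggregate": {"transform_orders", "transform_users"},
    "load": {"aggregate"},
}

for batch in execution_batches(pipeline):
    print(batch)
```

Here `aggregate` only shows up once both transform tasks are done, which is the "only after those two other things" rule in code.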

Without DAGs, your workflows are chaos. With them, they’re predictable chaos, which is really the best you can hope for in data engineering.

The DAG Hall of Fame

| Platform | DAG Style | Developer Mood |
|---|---|---|
| Apache Airflow | Python-defined, cron-powered | “It works until it doesn’t.” |
| Prefect | Python-native, cloud-first | “Less YAML, more joy.” |
| Dagster | Type-safe, declarative, nerdy in a good way | “We do data engineering properly.” |
| Luigi | Old-school, dependable | “Still works after 10 years. Respect.” |

DAGs show up everywhere — not just in orchestration tools. Machine learning pipelines, build systems (like Bazel), even CI/CD tools (like GitHub Actions) use DAGs under the hood. Once you start seeing them, you can’t unsee them.

Why Engineers Love Them (and Hate Them)

Engineers love DAGs because they make complex workflows understandable. They’re visual logic. You can open a graph view in Prefect or Airflow and literally watch your data move — extraction, transformation, loading, alerts. It’s satisfying, like watching trains hit all the right stations on schedule.

But DAGs are also the source of much developer pain. One bad dependency, and everything downstream of it halts. Circular references? Nightmare fuel: the graph stops being a DAG at all, and most schedulers will refuse to load it. Misconfigured retries? The same failure, replayed until someone notices. Debugging a misbehaving DAG feels like therapy: you’re tracing your past mistakes, hoping you’ve finally broken the cycle.
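Both failure modes are easy to see in a toy runner. This is a rough sketch under stated assumptions: `run_dag`, the status strings, and the task names are invented for illustration and are not how Airflow or Prefect implement it. It caps retries so a broken task cannot loop forever, and it marks everything downstream of a failure as blocked instead of running it.

```python
from graphlib import TopologicalSorter


def run_dag(dependencies, tasks, max_retries=2):
    """Run tasks in dependency order; return a {task: status} mapping.

    `tasks` maps each task name to a zero-argument callable.
    """
    status = {}
    # static_order() yields every task after all of its dependencies.
    for name in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(name, ())
        if any(status[u] != "success" for u in upstream):
            # One bad dependency blocks everything downstream of it.
            status[name] = "upstream_failed"
            continue
        # Bounded retries: one initial attempt plus max_retries more.
        for _attempt in range(1 + max_retries):
            try:
                tasks[name]()
                status[name] = "success"
                break
            except Exception:
                status[name] = "failed"
    return status


def always_fails():
    raise RuntimeError("source API is down")


deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
tasks = {"extract": always_fails, "transform": lambda: None, "load": lambda: None}
print(run_dag(deps, tasks))
```

With `extract` failing on every attempt, `transform` and `load` never run and are reported as `upstream_failed`. That is the whole graph halting, but with a clear record of why.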

Still, DAGs are indispensable because they represent something deeper: determinism, at least in the order things run. In a stack full of unpredictable APIs, flaky endpoints, and non-idempotent scripts, a DAG can’t make the individual tasks reliable, but it does guarantee the sequence. It tells your infrastructure, “This is how we do things, every time.”

The DAG Future: Smarter, Dynamic, and Self-Healing

The new generation of tools (Prefect 2.0, Dagster, Flyte) is evolving DAGs beyond static definitions. They’re becoming dynamic, reactive, and sometimes even self-healing. Instead of hard-coding every task graph, you can generate DAGs on the fly, respond to upstream data changes, and rerun only what’s broken.
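As a rough illustration of those two ideas, here is a library-free sketch. `build_dag` and `tasks_to_rerun` are hypothetical helpers, not part of Prefect, Dagster, or Flyte. The first generates a fan-out/fan-in graph from a runtime list of partitions; the second works out the smallest set of tasks to rerun after a failure, namely the failed tasks plus everything downstream of them.

```python
def build_dag(partitions):
    """Generate a graph from runtime input: one branch per partition."""
    deps = {"report": set()}
    for p in partitions:
        deps[f"extract_{p}"] = set()
        deps[f"transform_{p}"] = {f"extract_{p}"}
        # Every branch feeds the final report step.
        deps["report"].add(f"transform_{p}")
    return deps


def tasks_to_rerun(dependencies, failed):
    """Return the failed tasks plus every task downstream of them."""
    # Invert the mapping: task -> tasks that depend on it.
    downstream = {}
    for task, upstream in dependencies.items():
        for u in upstream:
            downstream.setdefault(u, set()).add(task)

    rerun, stack = set(), list(failed)
    while stack:
        task = stack.pop()
        if task not in rerun:
            rerun.add(task)
            stack.extend(downstream.get(task, ()))
    return rerun


dag = build_dag(["eu", "us"])
print(sorted(tasks_to_rerun(dag, {"transform_eu"})))
# ['report', 'transform_eu']
```

The `us` branch and `extract_eu` are untouched, so their earlier results can be reused, assuming those tasks are safe to skip (for example, because their outputs were persisted).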

We’re moving toward intelligent DAGs — workflows that understand their own dependencies and recover gracefully. Airflow walked so Dagster could run type checks and Prefect could throw cheeky runtime warnings.

Professor Packetsniffer Sez

DAGs aren’t sexy. They’re not new. But they’re essential. They’re how you keep thousands of moving parts from eating each other alive.

In a world obsessed with “AI everything,” DAGs are a humble reminder that logic still matters. They’re the backbone of reliability in an unpredictable universe — the thing that makes your pipelines reproducible, debuggable, and, dare we say, civilized.

So next time you see a perfect DAG visualization — all green, no retries, no errors — take a screenshot. Frame it. Because that, right there, is the rarest thing in data engineering: peace.